AMD Ryzen Issues

Shit’s on fire

After building and running my home server setup, I kept getting alerts and seeing issues with CPU performance.

There’s plenty around the internet about the 5900X’s heat and performance. The gist of it is that it’s “totally normal for it to operate at 95C”.

Ok, first: I get it. It’s technically a gaming CPU with a TDP of 105W, so if you’re blasting some game, it can handle high temps under extreme loads. The assumption there is that those loads are peaky and intermittent, so when you’re done, it comes back down to a normal level. AMD’s site also lists water cooling as the recommended cooling for it.

Now we have a “hot as shit” CPU in a server where the loads are typically flat and don’t really spike at all. Should be fine, right? Here’s where the fun begins.

So WTF?

I started building out Home Assistant and wanted more specific graphs and monitoring for it in Grafana, mostly to monitor refrigerator temperatures and energy usage. More on the Home Assistant setup here.

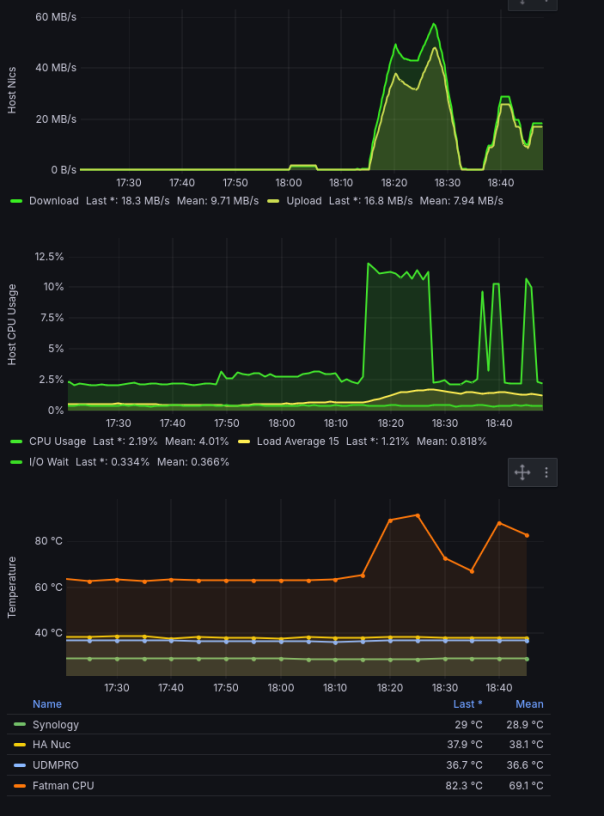

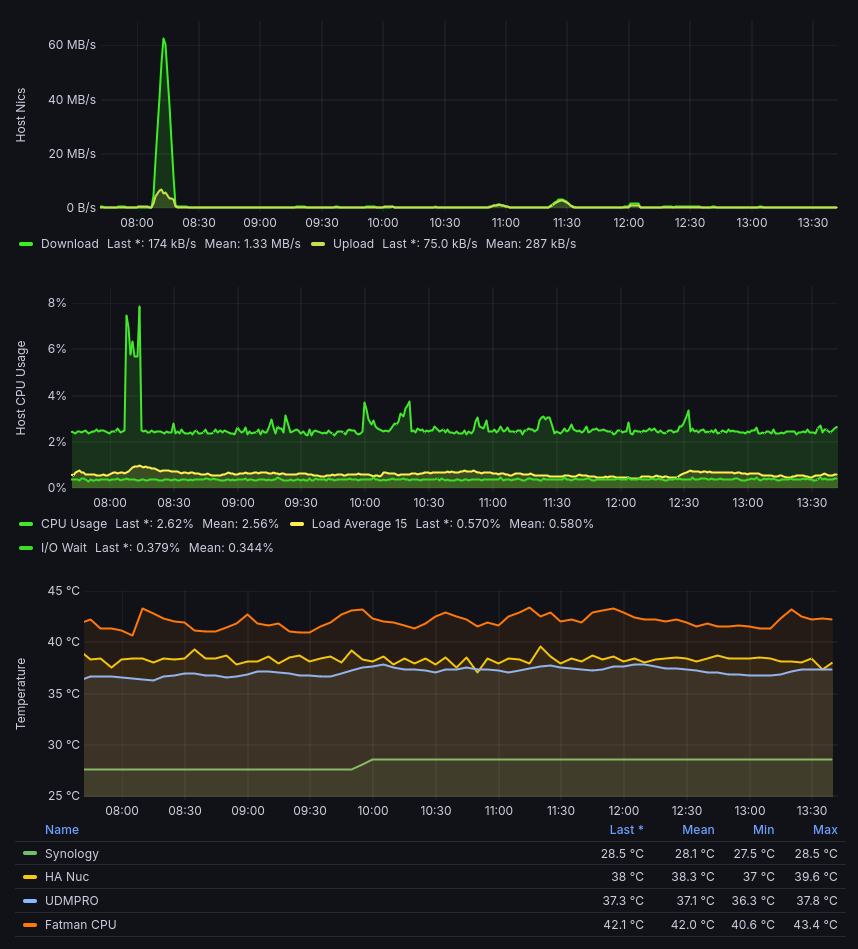

So naturally, I wanted an all-in-one dashboard to see what’s going on across the whole system. I had already built a bunch of Grafana dashboards for my home setup, but I wanted some specifically for the Home Assistant servers, devices, network, etc. Along the way I built a dashboard to see the server host metrics in detail, and this is what popped up under normal operations:

First Issues

What I saw when I first discovered the issues.

Ok, so what do these graphs tell us? Everything is in the same rack, but one server is running way hotter at “idle”. Even worse, it spikes up more than 20C with only 12% CPU load.

Servers typically have a load level they more or less idle at. This is why percentile metrics are used so often for anomaly detection: we have a standard load of X, and when the load goes above the 95th percentile, we alert. Cool. What’s confusing here is why this server idles 20C hotter than the other servers in the rack.
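To make the percentile idea concrete, here’s a minimal Go sketch: take a baseline window of readings, compute its 95th percentile with the nearest-rank method, and flag any new reading above it. The `percentile` and `anomalies` helpers and the sample temperatures are hypothetical, for illustration only; a real setup would do this inside the monitoring stack rather than in a standalone program.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// percentile returns the p-th percentile (0 < p <= 100) of values using the
// nearest-rank method. It sorts a copy so the caller's slice is untouched.
func percentile(values []float64, p float64) float64 {
	if len(values) == 0 {
		return math.NaN()
	}
	sorted := append([]float64(nil), values...)
	sort.Float64s(sorted)
	// Nearest rank: ceil(p/100 * N), then convert to a 0-based index.
	rank := int(math.Ceil(p / 100 * float64(len(sorted))))
	if rank < 1 {
		rank = 1
	}
	return sorted[rank-1]
}

// anomalies returns the readings in current that exceed the p-th percentile
// of the baseline window.
func anomalies(baseline, current []float64, p float64) []float64 {
	threshold := percentile(baseline, p)
	var out []float64
	for _, v := range current {
		if v > threshold {
			out = append(out, v)
		}
	}
	return out
}

func main() {
	// Hypothetical idle temperatures (°C) for one host, one reading per poll.
	baseline := []float64{40, 41, 41, 42, 42, 42, 43, 43, 44, 45,
		41, 42, 43, 42, 41, 44, 43, 42, 41, 46}
	current := []float64{42, 43, 61, 44, 65}

	fmt.Printf("p95 threshold: %.1f°C\n", percentile(baseline, 95))
	fmt.Println("alerting on:", anomalies(baseline, current, 95))
}
```

The catch this post runs into is that a percentile only tells you a host deviates from its *own* baseline; a server that is consistently 20C hotter than its neighbors never trips it.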

AMD Ryzen Issues?

Like most people seeing an issue like this, I started down the troubleshooting path. Since I’m running all my K3s nodes and other VMs in KVM using libvirt, maybe the VM overhead is insane and causing the issue? Maybe it’s also my rack configuration: the server sits in the middle of the rack, and 2U doesn’t have amazing airflow. I could move it to the top of the rack, where I put 2 stronger fans to pull air out the top, and pop the hood on the server. Basically, what can I do to get this thing as cool as possible?

Top of rack without VMs running:

k10temp-pci-00c3

Adapter: PCI adapter

Tctl: +33.5°C

Tccd1: +37.5°C

Tccd2: +33.2°C

acpitz-acpi-0

Adapter: ACPI interface

temp1: +16.8°C (crit = +20.8°C)

temp2: +16.8°C (crit = +20.8°C)

nouveau-pci-0600

Adapter: PCI adapter

GPU core: 1.06 V (min = +0.80 V, max = +1.19 V)

temp1: +46.0°C (high = +95.0°C, hyst = +3.0°C)

(crit = +105.0°C, hyst = +5.0°C)

(emerg = +135.0°C, hyst = +5.0°C)

During startup:

k10temp-pci-00c3

Adapter: PCI adapter

Tctl: +63.9°C

Tccd1: +57.0°C

Tccd2: +65.5°C

acpitz-acpi-0

Adapter: ACPI interface

temp1: +16.8°C (crit = +20.8°C)

temp2: +16.8°C (crit = +20.8°C)

nouveau-pci-0600

Adapter: PCI adapter

GPU core: 1.06 V (min = +0.80 V, max = +1.19 V)

temp1: +45.0°C (high = +95.0°C, hyst = +3.0°C)

(crit = +105.0°C, hyst = +5.0°C)

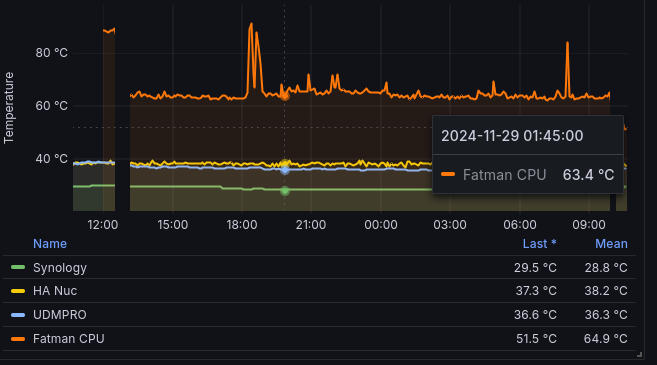

(emerg = +135.0°C, hyst = +5.0°CNow that grafana is back up, let it run again after moving the server to the top of rack and popping the hood to let the fans help.

Comparing Temperatures

Fun. It didn’t make any difference. Alright, back to searching for answers. I came across a few Reddit threads 1, 2, 3, 4. The issue seems to hit pretty much anyone doing any kind of virtualization on a 5900X. Some of the “working” responses:

- Set the voltage lower in the BIOS.

- Disable overclocking.

- Check ambient temps.

- Make sure the water pump in AIO (all-in-one) coolers is functioning properly.

- Thermal paste and CPU seating (calling BS on this, considering my server doesn’t overheat and crash).

- AMD Ryzen Master Utility

Here’s what’s wrong with most of these:

Voltage

Setting the voltage lower than default can help with this. The downside is that setting it too low causes the system to keep rebooting because it can’t power the boot sequence (ask me how I know). This one’s a fail.

Disable overclocking

This one makes the most sense. The main issue is that every manufacturer and chipset has different features, display screens, and hidden menus, which leaves you deciphering all the options and trying things. Most boards don’t seem to have a blanket “disable overclocking” setting. More on this later.

Ambient Temps

This makes some sense, but only if every device in the rack is running as hot as the hottest one. In my case, the server is easily 20C hotter than the rest of the devices. Fail.

Water Pump / CPU Cooler

Again, this makes some sense for the issue. Since I don’t have a water cooler, I just checked the fan settings in the BIOS. The fan is recognized, working, and pretty much at full blast. If I did have an AIO, this could be super confusing, as it would look like it wasn’t functioning properly. Still, the goal is to try everything before buying new parts to attempt to solve the issue.

Thermal Paste / CPU Seating

I call bullshit on anyone saying this is the issue. In those Reddit threads, people are gaming on VMs with GPU pass-through, and everything works fine apart from the temps being super high. That said, in a gaming setting, I’m not sure this is outside expected behavior.

AMD Ryzen Master Utility

Not sure about this one. I assumed it was some Windows app that lets you change clocks and voltages on demand from the OS, like MSI Afterburner for GPUs.

Ok, so what worked and how?

The first thing I needed was a way to test without relying on Grafana. The setup currently pulls host metrics from two sources: Telegraf and Kubernetes. Both are scraped by Prometheus, which runs on the K3s cluster. Telegraf has a lot of configuration options, one of which is polling lm_sensors. That’s what I used above to grab the temperature data, by running `sudo sensors`. The cheap and easy option was to run it under watch and eyeball whether it went up or down: `watch -n 5 sensors`. That works for a simple eyeball check, but not for data we can compare.

Tools

So I wrote a few things to help with this:

- A Bash script to poll the data and write it to a file.

- A Go app to take that output file and create a graph from the data.

All my homelab stuff is in private repos for obvious reasons, so I have a homelab-public repo with all of this in it.

Usage is pretty simple: run the Bash script with whatever settings you want, feed its output file to the Go app, then open the result (an HTML file) and look at the data.

AMD Core Performance Boost (CPB)

Now to the nuts and bolts of this whole write-up. AMD Turbo Core, a.k.a. AMD Core Performance Boost (CPB), is a dynamic frequency scaling technology that allows the processor to adjust its operating frequency as needed for increased performance. On Ryzen, it also comes with 2 other features: Precision Boost, which bumps clocks in 25 MHz increments, and Extended Frequency Range (XFR), which unlocks a wider boost range.

Essentially, if this is enabled, your CPU decides that since you’re not hitting the 105W TDP and are under the temperatures it deems safe, it can boost the clock, which also increases the temperature.

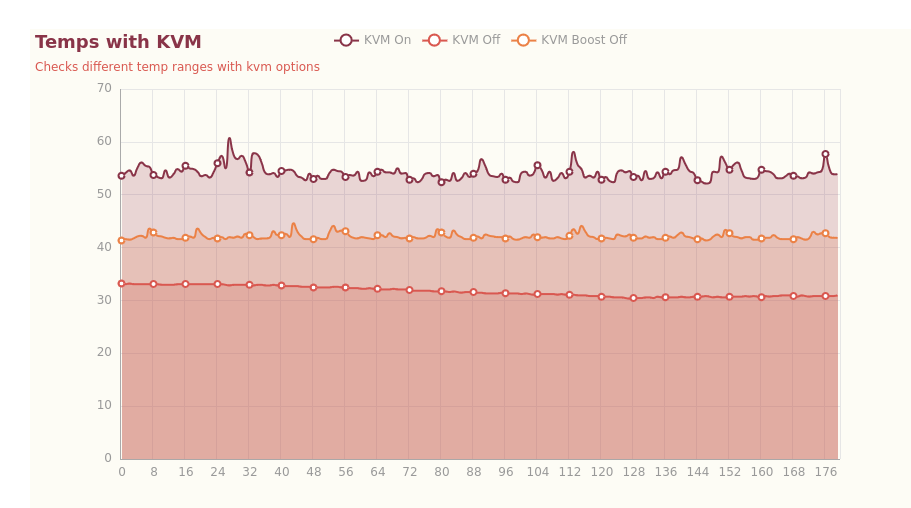
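If you want to confirm from the OS whether boost is active, Linux exposes a global `boost` switch at `/sys/devices/system/cpu/cpufreq/boost` when the acpi-cpufreq driver is in use (1 means enabled, 0 disabled), and per-CPU `scaling_cur_freq` files report the current clock in kHz. The Go sketch below only reads those files; it assumes the acpi-cpufreq driver, and with other cpufreq drivers (such as amd-pstate) the boost file may be missing or live elsewhere. It changes nothing, since the change described here is made in the BIOS.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strconv"
	"strings"
)

// Assumption: acpi-cpufreq driver; other drivers may not expose this file.
const boostPath = "/sys/devices/system/cpu/cpufreq/boost"

// parseBoost interprets the contents of the boost file ("1" or "0").
func parseBoost(s string) (bool, error) {
	switch strings.TrimSpace(s) {
	case "1":
		return true, nil
	case "0":
		return false, nil
	}
	return false, fmt.Errorf("unexpected boost value %q", s)
}

// maxCurFreqMHz returns the highest scaling_cur_freq (reported in kHz)
// across all CPUs, converted to MHz.
func maxCurFreqMHz() (float64, error) {
	files, err := filepath.Glob("/sys/devices/system/cpu/cpu[0-9]*/cpufreq/scaling_cur_freq")
	if err != nil || len(files) == 0 {
		return 0, fmt.Errorf("no scaling_cur_freq files found")
	}
	best := 0.0
	for _, f := range files {
		b, err := os.ReadFile(f)
		if err != nil {
			continue // CPU may be offline or the file unreadable
		}
		khz, err := strconv.ParseFloat(strings.TrimSpace(string(b)), 64)
		if err == nil && khz/1000 > best {
			best = khz / 1000
		}
	}
	return best, nil
}

func main() {
	if b, err := os.ReadFile(boostPath); err != nil {
		fmt.Println("boost file not available:", err)
	} else if on, err := parseBoost(string(b)); err != nil {
		fmt.Println(err)
	} else {
		fmt.Println("core boost enabled:", on)
	}
	if mhz, err := maxCurFreqMHz(); err == nil {
		fmt.Printf("highest current core clock: %.0f MHz\n", mhz)
	}
}
```

Running it before and after flipping the BIOS setting is a quick sanity check that the change actually took effect.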

So I ran the Bash script 3 times to generate these datasets:

- KVM On (all VMs and apps running): default BIOS settings with SVM (CPU virtualization) enabled.

- KVM Boost Off (no VMs or apps running): default BIOS settings with SVM (CPU virtualization) enabled.

- KVM On (all VMs and apps running): default BIOS settings with SVM (CPU virtualization) enabled and Core Performance Boost disabled.

Then I passed those 3 output files into the Go app, and it rendered this:

Data from sensors testing

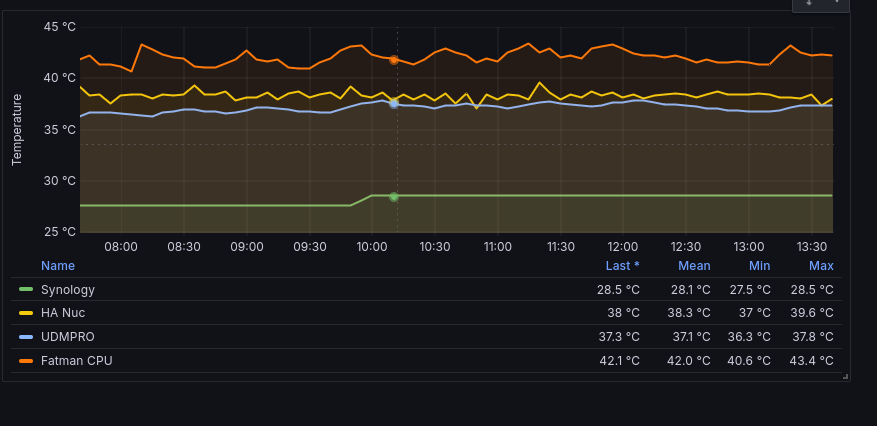

And in Grafana:

After Boost disabled

Grafana Table Outputs:

| Server | Last (°C) | Mean (°C) | Min (°C) | Max (°C) |
|---|---|---|---|---|
| Synology | 28.5 | 28.5 | 28.5 | 28.5 |
| HA Nuc | 38.4 | 38.1 | 36.9 | 41 |
| UDMPRO | 37.5 | 37.5 | 36.6 | 38.1 |
| Fatman | 42.4 | 46.7 | 41.4 | 55.9 |
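The percentages in the next paragraph come straight from the Fatman row: mean divided by max, and max minus mean. A minimal Go check of that arithmetic; the `row` type and `meanVsMax` helper are illustrative names, not part of any tool from this post.

```go
package main

import "fmt"

// row holds one Grafana table row, all values in °C.
type row struct {
	name                 string
	last, mean, min, max float64
}

// meanVsMax returns the mean as a fraction of the max and the gap max-mean in °C.
func meanVsMax(r row) (ratio, gapC float64) {
	return r.mean / r.max, r.max - r.mean
}

func main() {
	fatman := row{"Fatman", 42.4, 46.7, 41.4, 55.9}
	ratio, gap := meanVsMax(fatman)
	fmt.Printf("%s: mean is %.1f%% of max, %.1f%% (%.1f°C) below it\n",
		fatman.name, ratio*100, (1-ratio)*100, gap)
}
```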

So for Fatman, the mean over max at this point is ~83.5%, meaning the temperature averaged 16.5%, or 9.2C, below the peak. And this was after spending all day testing this machine. Currently, it looks like this:

Current Temps

Current Temps with a spike

Temp doesn’t change when CPU is spiking now

Conclusion / TL;DR

Turn off AMD Core Performance Boost if you don’t need it to overclock on demand. I may call this a homelab server, but really it’s a home/self-hosted server for things I use, so I need it to be stable. The 5900X is a great processor for this type of setup, with a great amount of CPU for the money. I just don’t need to overclock my servers.